Synthetic Media and Deepfakes – Where Can Fun Turn Into Harm?

There's a fine line between deepfakes and synthetic media. How can we keep from crossing it?

The terms deepfake and synthetic media are not interchangeable. Although machine learning, generative adversarial networks, and other artificial intelligence techniques lie at the heart of both, two significant differences separate them: intent and attribution.

What is a deepfake?

The term deepfake refers to a hyper-realistic “fake” video, image, or voice recording created with deep learning technology with the intent of misleading or manipulating a viewer. Many deepfakes are produced with a generative adversarial network (GAN), in which one AI model (the generator) produces fakes while a second model (the discriminator) tries to tell them apart from real samples. This contest teaches the generator to produce increasingly convincing media. Anonymous users or bots can produce and spread deepfakes, hiding the traces of the content’s origin.
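The adversarial contest described above can be illustrated with a deliberately tiny sketch. It is not how production deepfake tools are built. It assumes one-dimensional "media" (numbers drawn around a real mean of 4.0), a linear generator, and a logistic discriminator, with gradients written out by hand. The names `train_toy_gan` and `sigmoid`, and the learning rate and step count, are illustrative choices rather than anything taken from a real library.

```python
import math
import random


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(steps=2000, lr=0.01, real_mean=4.0, seed=0):
    """Train a toy 1-D GAN and return ((a, b), (w, c)).

    Generator:      G(z) = a * z + b          (tries to imitate real samples)
    Discriminator:  D(x) = sigmoid(w * x + c) (estimates P(x is real))
    """
    rng = random.Random(seed)
    a, b = 1.0, 0.0   # generator parameters (assumed starting point)
    w, c = 0.1, 0.0   # discriminator parameters (assumed starting point)

    for _ in range(steps):
        x_real = rng.gauss(real_mean, 1.0)  # one sample of "real" data
        z = rng.gauss(0.0, 1.0)             # random noise fed to the generator
        x_fake = a * z + b                  # the generator's fake sample

        # Discriminator step: gradient ascent on
        # log D(x_real) + log(1 - D(x_fake)).
        d_real = sigmoid(w * x_real + c)
        d_fake = sigmoid(w * x_fake + c)
        w += lr * ((1.0 - d_real) * x_real - d_fake * x_fake)
        c += lr * ((1.0 - d_real) - d_fake)

        # Generator step: gradient ascent on log D(G(z)),
        # i.e. nudge the fake toward what the discriminator calls real.
        d_fake = sigmoid(w * x_fake + c)
        grad_x = (1.0 - d_fake) * w
        a += lr * grad_x * z
        b += lr * grad_x

    return (a, b), (w, c)


if __name__ == "__main__":
    (a, b), (w, c) = train_toy_gan()
    print(f"generator offset b = {b:.2f} (real data is centered on 4.0)")
```

Each loop iteration lets the discriminator improve its judgment first and then lets the generator adjust to fool it, which is the feedback loop that drives fakes toward realism. Real systems replace these two-parameter models with deep neural networks trained on images, video, or audio.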

Some would call any AI-generated content a “deepfake” since the result is not an accurate depiction of the real situation. However, negative intent and lack of attribution are what actually make a deepfake a “deepfake”.

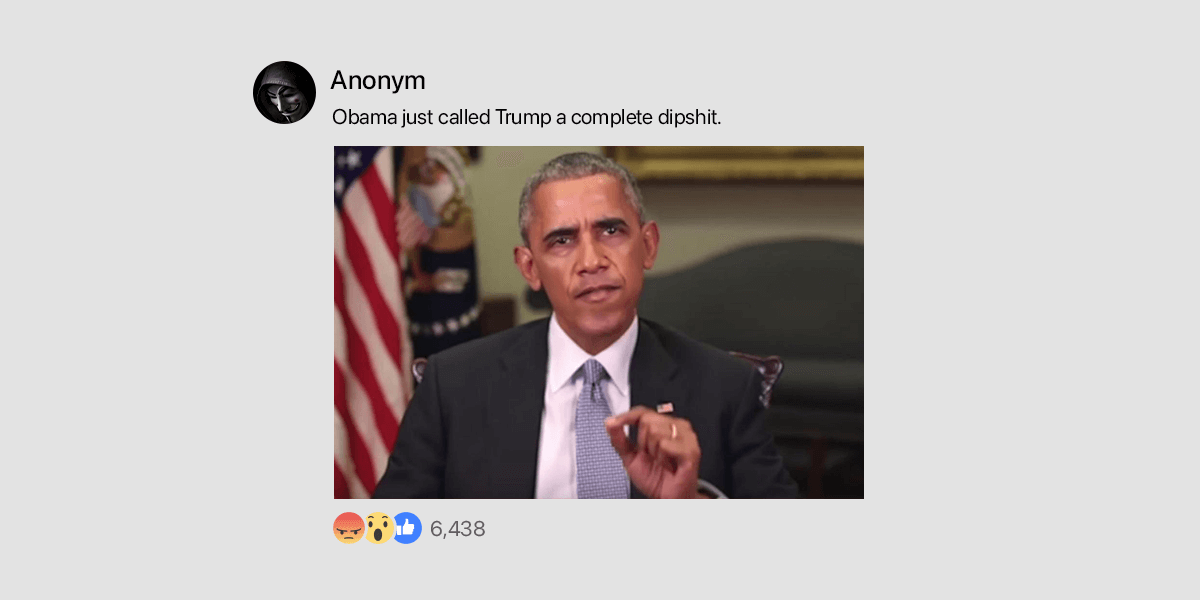

Deepfakes have been used to trick politicians, put the faces of celebrities into pornographic videos, harass and bully people, defraud businesses, and spread fake news. They raise ethical concerns and pose societal threats, ranging from potential infringement of image rights to disinformation and fraud.

Liar's dividend

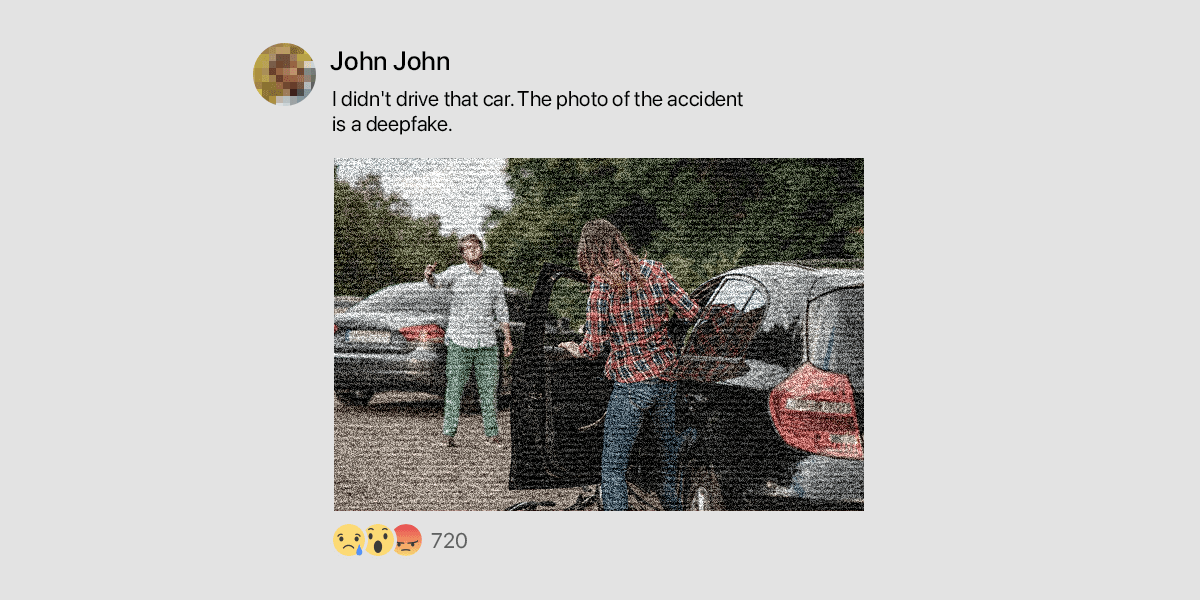

Deepfakes with no attribution are becoming harder to detect, and internet users often have trouble separating truth from fiction, mistakenly calling both entertaining and deceptive videos deepfakes. More people are also dismissing unflattering videos of themselves as deepfakes to evade responsibility for their actions. This deliberate dismissal of inconvenient facts is known as the liar's dividend.

One of the most famous cases of the liar's dividend involves the recording of Donald Trump's vulgar remarks about women. The video caused a sensation during the 2016 presidential race, and Trump later questioned the authenticity of the footage, at one point even claiming it was fake.

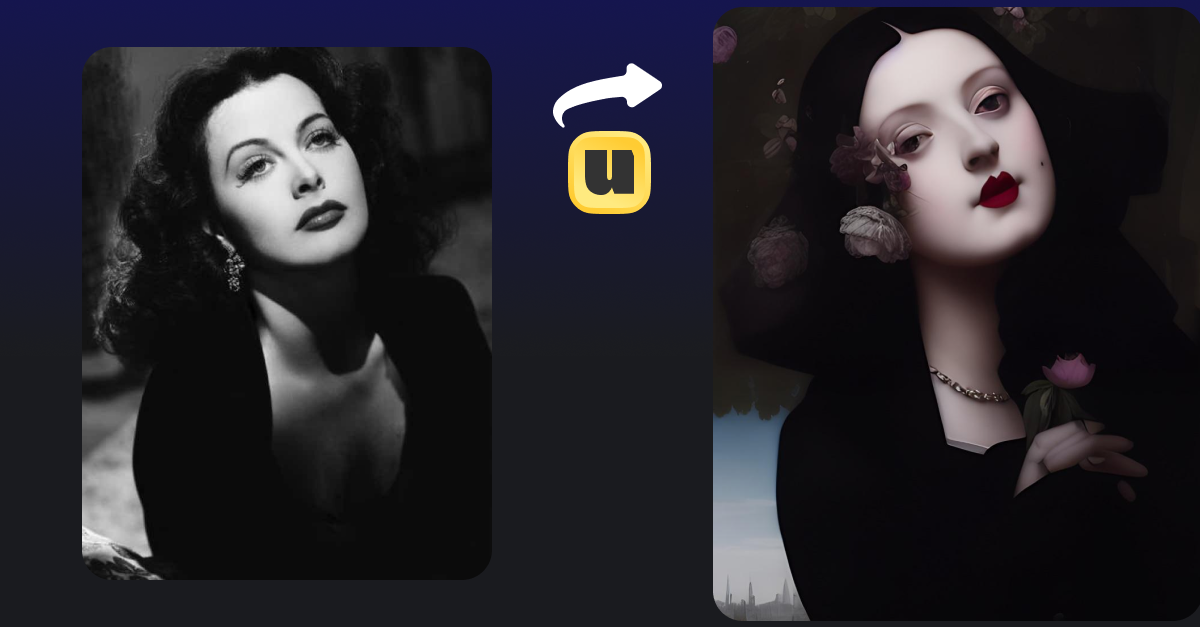

Key Points about Synthetic Media

Synthetic media is an all-encompassing term for content produced artificially. Today, it includes AI-generated music, imagery, video, synthesized voice, and text. The field is ever-expanding as companies aim to disrupt more and more parts of traditional media, making it easier to create new things.

Deepfakes are a form of synthetic media, distinguished by their intent and, as a result, by how they are used. Synthetically generated content is a broader category that is also used for entertainment, educational, and marketing purposes. Synthetic content creators (outside of the deepfake variety) attribute their works and spread them via trusted channels. Unlike deepfake creators, who intentionally obscure that context, they do not hide information about the tools they used or the modifications they made.
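To make the idea of attribution concrete, here is a minimal sketch of a disclosure record that a creator might publish alongside a piece of synthetic media. Everything in it is an assumption for illustration: the field names, the functions `make_manifest` and `matches_manifest`, and the example creator and tool names are hypothetical and do not follow any particular provenance standard.

```python
import hashlib
import json


def make_manifest(media_bytes, creator, tool, modifications):
    """Build a hypothetical disclosure record for a piece of synthetic media."""
    return {
        "sha256": hashlib.sha256(media_bytes).hexdigest(),  # fingerprint of the file
        "creator": creator,                                  # who made it
        "tool": tool,                                        # what generated it
        "modifications": list(modifications),                # what was changed
        "synthetic": True,                                   # explicit disclosure
    }


def matches_manifest(media_bytes, manifest):
    """Return True if the media is byte-for-byte what the manifest describes."""
    return hashlib.sha256(media_bytes).hexdigest() == manifest["sha256"]


if __name__ == "__main__":
    clip = b"example synthetic video bytes"  # stand-in for a real media file
    manifest = make_manifest(
        clip,
        creator="Example Studio",            # hypothetical creator
        tool="hypothetical-avatar-tool",     # hypothetical tool name
        modifications=["face synthesis", "voice synthesis"],
    )
    print(json.dumps(manifest, indent=2))
    print(matches_manifest(clip, manifest))         # True
    print(matches_manifest(clip + b"x", manifest))  # False
```

A bare hash only shows that a file matches its record. It does not prove who wrote the record, which is why real provenance tooling typically adds cryptographic signatures on top of this kind of metadata.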

In the world of synthetic media, we put our trust in the hands of creators and tools of attribution. For this reason, it is crucial to support the growth of synthetic media and control the proliferation of deepfakes. Content responsibly produced using ML and AI technologies can be safe and transparent for every digital user.

Synthetic media can positively transform content across industries, and it's important to take proactive action to support this process. Companies of all sizes, as well as independent content creators, should partner with regulators to encourage policies that protect against the threat of deepfakes on the web; join industry initiatives that promote the development and adoption of media attribution and provenance standards and tools; support education and awareness-building initiatives in the synthetic media space; and help create a safe environment for the development of synthetic media.